Cognition

links: Psychology reference:

Cognition/Cognitive Science

Computational Cognitive Neuroscience

https://www.youtube.com/playlist?list=PLu02O8xRZn7xtNx03Rlq6xMRdYcQgEpar 70-part(!) lecture series. I also got a 100-note Anki deck! This actually looks fucking crazy, honestly. It also has simulation exercises. Resume-worthy? Who knows!

- Might be relevant: https://quizlet.com/KoolKelso12

- https://quizlet.com/140996637/computational-cognitive-neuroscience-flash-cards/

- https://quizlet.com/mochilover007 this guy has his whole computational cognitive neuroscience course; I don’t think it’s the same one though

- https://ccnlab.org/teaching/ccn/

- Synaptic weight is very important: it is the net ability of a presynaptic neuron to influence a postsynaptic one, both qualitatively and quantitatively, via how much neurotransmitter (NT) it releases and how effectively that NT opens postsynaptic channels.

- It can be framed either as the presynaptic neuron’s ability to exert that influence or as the postsynaptic neuron’s susceptibility to it (strong weight = sensitive).
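A minimal sketch of how a synaptic weight enters a rate-coded model neuron, assuming each presynaptic activity (0 to 1) is multiplied by its weight and the products are averaged into a net excitatory input. Averaging rather than summing is an assumption, and `net_input` is a hypothetical helper, not the course’s code.

```python
def net_input(acts: list[float], weights: list[float]) -> float:
    """Average of presynaptic activity times synaptic weight (rate-code sketch)."""
    if len(acts) != len(weights):
        raise ValueError("need one weight per presynaptic input")
    if not acts:
        return 0.0
    # A strong weight lets the same presynaptic activity count for more.
    return sum(a * w for a, w in zip(acts, weights)) / len(acts)


# Same presynaptic activity, two different sets of weights.
acts = [1.0, 1.0, 0.0, 0.5]
weak = [0.2, 0.2, 0.2, 0.2]
strong = [0.9, 0.9, 0.9, 0.9]
print(net_input(acts, weak))    # 0.125
print(net_input(acts, strong))  # larger: same input, stronger influence
```

With identical presynaptic activity, raising the weights raises the net input, which is the “strong weight = sensitive” reading from the postsynaptic side.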

III Networks

Categorization, bidirectional excitatory dynamics, and inhibitory competition are the name of the game.

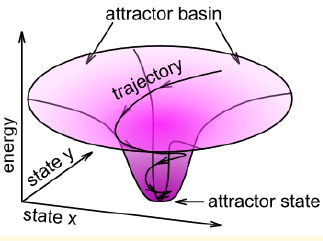

- Complex Systems type beat: bidirectional connectivity can be summarized as attractor dynamics or multiple constraint satisfaction.

- An attractor is basically a state toward which all the nodes (or whatever the units are) get pulled over time; it represents the network’s long-term dynamics.

- This kind of thing is mediated by lateral inhibition, which reduces sensory overload. Inhibitory competition keeps positive feedback loops and stuff in check.

- Lateral inhibition is mediated by GABAergic interneurons that fire alongside principal neurons.
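The attractor / multiple-constraint-satisfaction idea above can be illustrated with a classic Hopfield network. This is a swapped-in textbook model, not necessarily what the course uses: patterns are stored in symmetric (bidirectional) weights with a Hebbian outer-product rule, and a corrupted input gets pulled back to the stored pattern as units update one at a time. The two 32-unit patterns are hand-picked to be orthogonal so recall is guaranteed in this tiny example; `train_hopfield` and `settle` are hypothetical helper names.

```python
import numpy as np


def train_hopfield(patterns: np.ndarray) -> np.ndarray:
    """Hebbian outer-product weights; W is symmetric, so every connection is bidirectional."""
    n = patterns.shape[1]
    w = patterns.T @ patterns / n
    np.fill_diagonal(w, 0.0)  # no self-connections
    return w


def settle(w: np.ndarray, state: np.ndarray, max_sweeps: int = 10, seed: int = 0) -> np.ndarray:
    """Update +1/-1 units one at a time in random order until nothing changes."""
    rng = np.random.default_rng(seed)
    s = state.copy()
    for _ in range(max_sweeps):
        changed = False
        for i in rng.permutation(len(s)):
            # Each unit aligns with the weighted "votes" of all the others.
            new = 1 if w[i] @ s >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:  # fixed point: the network is sitting in an attractor
            break
    return s


# Two orthogonal 32-unit patterns.
a = np.array([1, -1] * 16)
b = np.array([1, 1, -1, -1] * 8)
w = train_hopfield(np.stack([a, b]))

probe = a.copy()
probe[:5] *= -1  # corrupt 5 of the 32 units
recalled = settle(w, probe)
print(np.array_equal(recalled, a))  # True: the noisy input is pulled back to pattern a
```

Each unit’s update only partially satisfies its constraints (its weighted inputs), but iterating those local updates lands the whole network in a globally consistent state, which is the “multiple constraint satisfaction” reading of an attractor.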

The cerebral cortex is ~85% excitatory (glutamatergic) neurons, mostly pyramidal neurons but also spiny stellate cells in layer 4, and ~15% inhibitory GABAergic interneurons.

- Notice that the pyramidal neurons are not interneurons - they make many long-range connections

Thalamic projections primarily terminate in layer 4 of their corresponding cortical areas, e.g. the primary visual cortex.

- The excitatory (spiny) stellate cells in layer 4 excel at collecting local axonal inputs to that layer.

- FINALLY an actually ankifiable nugget of gold: The thalamus is basically the sole relay between sensorimotor systems and the cerebral cortex, with the exception of the olfactory system.

The “hidden” areas, which elaborate on sensory input rather than receiving it directly, have thicker superficial layers 2-3, which contain pyramidal neurons.

- This is consistent with the brain as a whole: each brain region has an “input” section and a section where that input becomes more sophisticated before being projected on to another brain region.

“Output” areas, like the motor cortex, have thicker deep layers 5-6, since those layers project to subcortical areas.

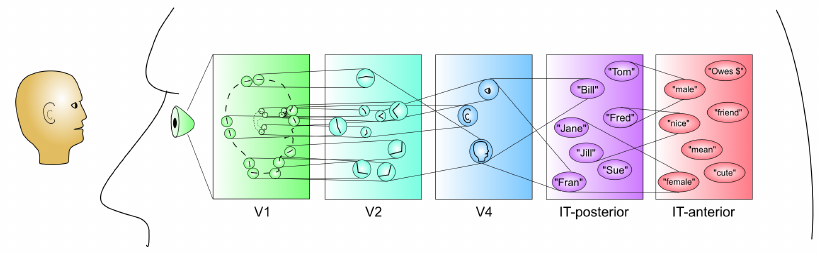

Simple if you’re familiar with neural networks. But this is a callback to Hierarchical Complexity. I just can’t help but scoop some of these pictures because they’re kinda cool.

- Bidirectionality is of course essential for recall of the various types of memory.

The eye uses only 3 types of cone photoreceptors to capture color! Everything else comes from graded potentials encoding how strongly each type is activated.

This point forward is cool stuff, but not what I’m looking for at the moment regarding neural correlates.

Typically no more than ~15-25% of neurons in any given area are active at a time.
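k-winners-take-all (kWTA) is one crude way to impose this kind of sparseness in a model, used here as a stand-in for inhibitory competition: a single shared inhibition level is placed so that only the k most excited units stay above it. The 20% figure is just picked from the ~15-25% range above, and `kwta` is a hypothetical helper.

```python
import numpy as np


def kwta(net: np.ndarray, frac_active: float = 0.2) -> np.ndarray:
    """k-winners-take-all: only the k most excited units stay above the inhibition level."""
    k = max(1, int(round(frac_active * net.size)))
    strongest_first = np.sort(net)[::-1]
    if k < net.size:
        # Put the shared inhibition halfway between the k-th and (k+1)-th strongest inputs.
        inhibition = (strongest_first[k - 1] + strongest_first[k]) / 2
    else:
        inhibition = strongest_first[-1] - 1.0  # everyone wins
    # Activity is whatever excitation is left after inhibition; losers are silenced.
    return np.maximum(net - inhibition, 0.0)


net = np.array([0.9, 0.1, 0.75, 0.3, 0.2, 0.6, 0.05, 0.4, 0.35, 0.15])
act = kwta(net, frac_active=0.2)
print(np.count_nonzero(act))  # 2 of 10 units (20%) remain active
```

If the k-th and (k+1)-th inputs tie, both end up silenced, so fewer than k units win; a real model would need some tie-breaking rule.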

Okay, so inhibition gives rise to a sort of competitive dynamic, where only the most excited neurons thrive in the ‘cognitive arena’ or something. Really? What’s interesting is that increasing global activity without inhibition would quickly give rise to problems like improper sensory gating: a “high energy” overshooting of attractor dynamics.

- Meanwhile, the whole “you only use 10% of your brain” myth would lead to the simple conclusion that all you need to do is increase global activity. I think an autistic academic debunking of this obviously debunked theory could have some gems in it: maybe we “use 100%”, but can we not “use” $\geq 101\%$, or use 100% of it at twice the rate? The latter question doesn’t need to go any further, since it ties pretty directly into investigations of intelligence, e.g. nerve conduction velocity.

- Like, what does make sense is to increase the activity of both the excitatory neurons and the inhibitory interneurons in proportion to one another (as they already are, “producing a similar set point of behavior”) to increase the flux of the ‘feedback’. But I suppose in a way they’re already working at “full speed”? Is there a bottleneck in just this dynamic, or must one look elsewhere?

- Intelligence still seems to be more like sophistication and accuracy.

- Because of course, it’s about efficiency: not being in the high-energy attractor state all the time. It may seem counterintuitive in this sense, because I just can’t shake the feeling that you might as well try to couple this efficiency with working at the highest bandwidth possible (though I’m not sure “bandwidth” is actually a thing here).

- But this actually makes sense. Having highly specialized neurons necessarily means they serve specific functions, i.e. nuanced/advanced downstream purposes. Their beauty lies exactly in not being activated all the time; I’ve been sort of conflating intelligence with laterality (which, uh, is technically correlated).

- This all sounds like Different levels of category abstraction by different dynamics in different prefrontal areas (especially the hierarchy image above)

I don’t think it’s best to think about what it’s saying about “competition” (only the most excited neurons overcome inhibitory signals) at the synapse level, but rather at the circuit/network level. This is what interneurons are all about. I’m getting ahead of myself, though. It’s especially important with regard to sensory input gating (like looking for somebody in a crowd) and detecting the salience of things.

- But that makes me wonder what’s going on during vipassana scanning where you’re aware of every nano-vibration in your body

- I’m also reminded of autistic savantism, like that guy with the big-ass library who knew every string of text: surely they have aberrant inhibitory interneurons.

Feedback inhibition: when excitatory neurons fire, they excite inhibitory interneurons via glutamatergic synapses; those interneurons loop back and release GABA onto the same excitatory neurons, driving $\ce{Cl^-}$ influx.

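Feedforward inhibition: the same excitatory inputs that drive the excitatory neurons also drive the interneurons, so inhibition scales with the incoming excitation and can anticipate it. Feedback inhibition, by contrast, tracks the actual activation output of the excitatory neurons rather than their net excitatory input.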
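A toy rate-model sketch of the two kinds of inhibition acting on one layer, loosely inspired by the feedforward-feedback (FFFB) inhibition in the Leabra framework but not its exact formulation: the feedforward term is computed straight from the incoming excitation, while the feedback term relaxes toward the layer’s actual average activity. All names, gains, the time constant `dt`, and the clipped activation function are hypothetical choices.

```python
import numpy as np


def simulate(x, w, ff_gain=0.5, fb_gain=1.0, dt=0.3, steps=30):
    """Toy excitatory layer sharing one inhibitory signal = feedforward + feedback.

    Returns final unit activities plus the per-step feedforward and feedback terms.
    """
    act = np.zeros(w.shape[0])
    fb = 0.0
    ff_hist, fb_hist = [], []
    for _ in range(steps):
        excitation = w @ x                          # drive arriving from the inputs
        ff = ff_gain * excitation.mean()            # feedforward: set by the input itself
        fb += dt * (fb_gain * act.mean() - fb)      # feedback: chases the layer's actual output
        net = excitation - (ff + fb)                # same inhibition subtracted from every unit
        act += dt * (np.clip(net, 0.0, 1.0) - act)  # rates relax toward the clipped drive
        ff_hist.append(ff)
        fb_hist.append(fb)
    return act, ff_hist, fb_hist


rng = np.random.default_rng(0)
w = rng.uniform(0.0, 1.0, size=(20, 10))  # hypothetical input -> layer weights
x = rng.uniform(0.0, 1.0, size=10)        # hypothetical input activities
act, ff_hist, fb_hist = simulate(x, w)
print(f"first step: ff={ff_hist[0]:.2f}, fb={fb_hist[0]:.2f}")
print(f"last step:  ff={ff_hist[-1]:.2f}, fb={fb_hist[-1]:.2f}")
```

Because `act` starts at zero, the feedback term is exactly 0 on the first step while the feedforward term is already at full strength; that is the anticipatory quality of feedforward inhibition. Feedback inhibition only builds once the units actually start firing.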

Learning

Neuroplasticity is defined here simply as the modification of synaptic weights via learning, driven by the level of postsynaptic $\ce{Ca^2+}$.

Activity on both the sending (presynaptic) and receiving (postsynaptic) side is needed to drive $\ce{Ca^2+}$ influx via NMDA channels.
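The “both sides” requirement can be sketched with the widely used Jahr & Stevens (1990) fit for the voltage-dependent $\ce{Mg^2+}$ block of NMDA channels, $B(V) = 1 / \left(1 + \frac{[\mathrm{Mg}^{2+}]}{3.57} e^{-0.062 V}\right)$, with $V$ in mV and $[\mathrm{Mg}^{2+}]$ in mM. Treating $\ce{Ca^2+}$ influx as simply glutamate binding times the unblocked fraction (ignoring driving force) is my simplifying assumption, as are the example voltages and the 1 mM $\ce{Mg^2+}$.

```python
import math


def mg_unblock(v_mv: float, mg_mm: float = 1.0) -> float:
    """Fraction of NMDA channels free of Mg2+ block (Jahr & Stevens 1990 fit)."""
    return 1.0 / (1.0 + (mg_mm / 3.57) * math.exp(-0.062 * v_mv))


def relative_ca_influx(glutamate_bound: float, v_mv: float) -> float:
    """Toy proxy: needs presynaptic glutamate AND postsynaptic depolarization."""
    return glutamate_bound * mg_unblock(v_mv)


# Presynaptic glutamate (0 or 1) crossed with resting vs depolarized membrane.
for glu, v in [(0.0, -70.0), (1.0, -70.0), (0.0, -10.0), (1.0, -10.0)]:
    print(f"glutamate={glu:.0f}, V={v:+.0f} mV -> Ca2+ proxy {relative_ca_influx(glu, v):.3f}")
```

Only the glutamate-plus-depolarization combination gives a large value: glutamate alone at rest is mostly blocked by $\ce{Mg^2+}$, and depolarization alone has nothing bound to open the channel.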

Self-organizing learning is a statistical refinement of the internal model, as opposed to error-driven learning, which comes from a direct contrast between expectation and outcome. Really, the only difference here is time scale.
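As I understand the CCN material, both kinds of learning run through the same XCAL “checkmark” function and differ only in the threshold that short-term co-activity is compared against: a slow, long-term average of the receiving neuron’s activity for self-organizing learning, and a faster, medium-term (expectation-phase) value for error-driven learning, which fits the “only difference is time scale” point. A minimal sketch, where `d_rev = 0.1` and all the example numbers are assumptions:

```python
def xcal(xy: float, theta: float, d_rev: float = 0.1) -> float:
    """XCAL-style 'checkmark': weight change from short-term co-activity xy vs threshold theta."""
    if xy > theta * d_rev:
        # Linear region: LTP when xy exceeds theta, LTD when it falls short.
        return xy - theta
    # Very low co-activity: the LTD dip shrinks back to zero as xy -> 0.
    return -xy * (1.0 - d_rev) / d_rev


xy_short = 0.4        # hypothetical short-term sender x receiver co-activity
long_term_avg = 0.15  # hypothetical slow average of receiver activity (self-organizing threshold)
medium_term = 0.6     # hypothetical expectation-phase co-activity (error-driven threshold)

print(xcal(xy_short, long_term_avg))  # positive: more active than usual, so strengthen
print(xcal(xy_short, medium_term))    # negative: less than expected, so weaken
```

The two branches meet at `xy = theta * d_rev`, so the function is continuous; the same synapse can strengthen or weaken depending only on which (slow or fast) threshold it is judged against.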

When the presynaptic neuron releases glutamate (i.e. fires an action potential), it binds both AMPA and NMDA receptors. If the postsynaptic membrane is depolarized enough (via $\ce{Na+}$ influx through AMPA receptors, from this and other active inputs), the $\ce{Mg^2+}$ block on the NMDA receptors is removed and $\ce{Ca^2+}$ flows in. I know all that, but what’s interesting is how the $\ce{Ca^2+}$ concentration in the postsynaptic dendritic spine alters chemical reactions that dictate both the efficacy and the number of AMPA receptors.

I’d have guessed they downregulate at high concentrations, but it’s the other way around: high $\ce{Ca^2+}$ drives LTP (more, and more effective, AMPA receptors), while more moderate levels drive LTD. This right here is basically what is meant by plasticity: an increase or decrease in AMPA efficacy is LTP or LTD respectively (and therefore tracks calcium concentration). This plays out on the scale of hundreds of milliseconds.
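The calcium story can be sketched as a simple threshold rule in the spirit of the calcium control hypothesis: low $\ce{Ca^2+}$ does nothing, moderate $\ce{Ca^2+}$ weakens the synapse (LTD), and high $\ce{Ca^2+}$ strengthens it (LTP). The thresholds `theta_d` and `theta_p`, the learning rate, and the triangular LTD dip are made-up placeholders in arbitrary units, not measured concentrations.

```python
def delta_w(ca: float, theta_d: float = 0.35, theta_p: float = 0.55, lr: float = 0.1) -> float:
    """Toy calcium-control rule: Ca2+ level -> weight change (all values hypothetical)."""
    if ca < theta_d:
        return 0.0  # too little Ca2+: no lasting change
    if ca < theta_p:
        # Moderate Ca2+: LTD, deepest midway between the thresholds, 0 at both ends.
        return -lr * min(ca - theta_d, theta_p - ca)
    return lr * (ca - theta_p)  # high Ca2+: LTP, growing with concentration


for ca in (0.1, 0.45, 0.9):
    print(f"Ca2+ = {ca:.2f} -> dW = {delta_w(ca):+.3f}")
```

Making the LTD dip return to zero at `theta_p` keeps the rule continuous, so a small change in calcium never flips the weight change abruptly from depression to potentiation.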

From here on out things get hella computational.